Artificial intelligence (AI) is reshaping industries worldwide, delivering efficiency gains, predictive insights, and improved decision-making, while also completing tasks and communicating productively with humans.

For government and quasi-government organizations, such as economic development organizations (EDOs)—entities charged with serving the public good—the adoption of AI represents a transformative opportunity, but it will require an understanding of these building blocks:

- Software

- Data

- AI’s agentic capabilities

EDOs that strategically integrate these resources, as well as support staff upskilling, will succeed in automating processes, boosting productivity, and better leveraging data for positive community economic development outcomes.

This series of articles explores the concepts and components of AI, key terms, and how this technology can work to improve EDO workflows. It also provides a practical overview of AI’s more advanced applications, the likely barriers to early adoption, and the importance of training and data collection methods that will best position EDOs to become more effective and efficient.

EDOs often struggle with fragmented, siloed, or incomplete data sets, which make it difficult to draw meaningful insights and inform strategic decisions. Legacy systems, inconsistent data collection methods, and limited integration between software platforms can further hinder their ability to access, aggregate, and analyze information in real time.

An organization’s ability to fully capitalize on the opportunities AI will offer, beyond its generative capabilities, will require a deeper understanding of the technology and the data and software it requires. The adoption challenges will be significant, as many EDOs lack personnel with advanced data literacy or AI expertise.

Employees may feel overwhelmed by the rapid pace of technological change, uncertain about how to leverage new AI tools, or concerned about the impact on their roles. However, by integrating AI-driven solutions into day-to-day workflows, EDOs can free up staff to focus on high-value activities, foster a culture of innovation, and upskill their teams to meet the demands of a data-driven future.

The purpose of these articles is to provide the necessary background so EDO leaders can better prepare their teams for the AI revolution.

What is AI?

At its core, AI is made possible by machines, networks, software, code, and data that, when combined, can perceive, reason, learn, predict, and act. These components include strong processing infrastructure (chips such as CPUs, GPUs, and TPUs, along with storage), information (data, text, documents, images, video, audio files, etc.), and frontend and backend software (a CRM, web portal, spreadsheet, and/or an AI portal like ChatGPT) for querying, programming, and displaying AI outputs or results.

For economic development leaders who aren’t tech experts, here’s a straightforward look at AI and the numerous components that make it work.

AI refers to the use of computers to perform tasks that typically require human intelligence. This includes understanding and using language the way people do, spotting patterns or trends in information, and suggesting actions to take, whether that’s fixing a problem, finding new opportunities, or getting things done more quickly and efficiently. AI is a subdiscipline of computer science, the broader field that spans everything from hardware to programming languages, operating systems, and algorithms.

Its capabilities include things like:

- Quickly noticing patterns or trends in large amounts of information, such as recognizing objects or faces in photos

- Understanding and communicating using everyday language—both written and spoken

- Learning from experience and making better decisions over time, then clearly explaining those decisions

- Offering helpful recommendations to solve challenges or make the most of new opportunities

The Technology that Runs AI

You have undoubtedly heard the term “large language model” or “LLM” when learning about AI. So, what is an LLM, and what role does it play in running AI?

An LLM (and there are many) is an AI model trained on an enormous body of information. To build one, developers gather website content, PDF documents, images, videos, social media posts, and other non-gated data from across the Internet, and the model learns the patterns in that material during training. Rather than keeping a searchable copy of every page, the LLM stores what it has learned as billions of numerical values called parameters.

I like to think of LLMs as massive libraries built from the world’s websites and electronically available documents, databases, reports, audio, video, and image files. For example, when you use Gemini or ChatGPT to answer a question, the tool runs your request through its own LLM and, in some cases, also searches the web to supplement its response.

Not all LLMs have captured the same information sources. Some LLMs have a specific information focus, such as an area of academic research or an industry, while others act as “aggregators” that draw on multiple LLMs. Many developers are racing to build the largest LLM, or “library,” of information, supported by superior processing capacity, so that their model can provide the most relevant, highest-quality output.

LLMs require massive storage capacity and energy. Imagine taking a significant portion of the world’s physical objects and constructing a building large enough to hold them all. Similarly, an LLM requires not only large data storage servers, but also the processing capability to examine relevant information, organize it, identify and understand correlations, and generate a response. Because each LLM is trained differently, that response is unique to the LLM used.

LLMs and related AI technologies, made possible by the combination of data, chips, and other hardware, work together to produce outputs that can perform many tasks once requiring human thought and communication, including:

- Predictive Analytics: Tools that analyze historical and real-time data to forecast trends, risks, and needs.

- Natural Language Processing (NLP): Technology enabling computers to understand and generate human language.

- Machine Learning (ML): Algorithms that learn from data to identify and predict outcomes or classify information.

- Generative AI: Models that create new content (text, images, simulations) to assist in communication and problem-solving.

- Robotic Process Automation (RPA): Tools that automate repetitive, rules-based administrative tasks.
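To make the first item above more concrete, here is a minimal sketch of predictive analytics in its simplest form: fitting a straight-line trend to historical data and projecting it one year forward. The employment figures are hypothetical, and real forecasting tools use far richer models and many more variables; this only illustrates the idea of learning from past data to forecast a future value.

```python
# Minimal predictive-analytics sketch: fit a least-squares linear trend and project it.
# The years and job counts below are hypothetical illustration data.

def fit_trend(xs: list[float], ys: list[float]) -> tuple[float, float, float]:
    """Return (slope, mean_x, mean_y) for an ordinary least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var, mean_x, mean_y

def forecast(xs: list[float], ys: list[float], target_x: float) -> float:
    slope, mean_x, mean_y = fit_trend(xs, ys)
    # A least-squares line always passes through the point (mean_x, mean_y).
    return mean_y + slope * (target_x - mean_x)

years = [2020, 2021, 2022, 2023, 2024]
jobs = [1000, 1040, 1110, 1150, 1190]  # hypothetical regional manufacturing jobs
print(round(forecast(years, jobs, 2025)))  # 1245
```

The trend here rises by 49 jobs per year, so the 2025 projection lands at 1,245; a real tool would also report how uncertain that projection is.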

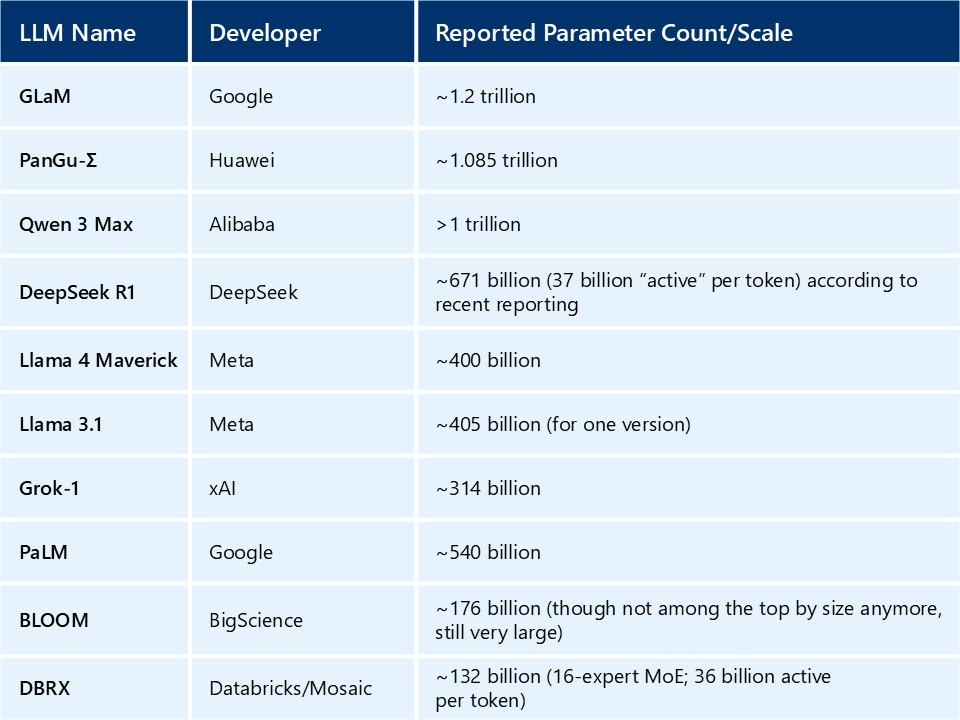

Currently (although this changes daily), there is a short but growing list of well-known, large-scale open-weight LLMs, meaning models whose trained parameters are published for anyone to download. These most-cited leaders have created models that exceed 70 billion (70B) parameters.

Parameter count, as a form of measurement, is not the only characteristic that determines an LLM’s effectiveness. LLMs also differ in accessibility, architecture (for example, whether they use a mixture-of-experts, or MoE, design), data quality, and the effectiveness of their training, which shapes the way they associate words. Currently, the largest LLMs include:

Note: Some models do not report parameter size (e.g., ChatGPT) and are not included.

These are the big, foundation-scale open-weight LLMs that get cited in leaderboards. Models with fewer than 70B parameters can also be defined as LLMs and are estimated to number in the tens to low hundreds, according to Hugging Face. Many of these are created for tailored uses such as academic research, or a specific industry or type of data.
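Parameter counts also translate directly into hardware needs. As a rough sketch, assuming each parameter is stored as a 16-bit (2-byte) number, a common format, the memory needed just to hold a model’s weights is the parameter count times the bytes per parameter. This ignores the additional memory a model uses while it is running, so real requirements are higher.

```python
# Rough estimate of memory needed to store a model's parameters (weights only).
# Uses decimal gigabytes (1 GB = 1,000,000,000 bytes).

BYTES_PER_GB = 1_000_000_000

def weight_memory_gb(num_parameters: int, bytes_per_parameter: float = 2) -> float:
    """Parameters x bytes per parameter, converted to gigabytes."""
    return num_parameters * bytes_per_parameter / BYTES_PER_GB

print(weight_memory_gb(70_000_000_000))     # 70B model, 16-bit: 140.0
print(weight_memory_gb(7_000_000_000))      # 7B model, 16-bit: 14.0
print(weight_memory_gb(70_000_000_000, 1))  # 70B model, 8-bit: 70.0
```

At roughly 140 GB for the weights alone, a 70B model needs more memory than a typical single GPU holds, which is one reason large models are often spread across several GPUs in a data center.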

It’s important to note that each new version of these LLMs (e.g., OpenAI’s recent release of GPT-5 in ChatGPT) is trained on a greater amount of data, documents, websites, etc., but every model’s knowledge stops at a training cutoff date that precedes its release. Information published after that date isn’t included until a later version is trained, unless the tool supplements the model with a live web search. As a result, an LLM’s built-in knowledge begins to age as soon as it is released, while each new version brings more information and processing capability that improve performance and output.

It is also important to note, and this is why your organization’s ability to capture and create its own unique data matters, that LLMs generally include only information that is publicly available on the Internet. LLMs like Gemini or ChatGPT cannot access information or documents saved in the cloud, in server closets, or on a computer hard drive without permission. They also cannot access anything that is “gated” behind a subscription login or a form that must be filled out.

CPUs, GPUs, RAG, and Tokens: The Hardware and Technology that Make LLMs Intelligent

On the infrastructure side, a few key components are essential for understanding how LLMs and modern AI systems, such as ChatGPT or digital agents supported by it, actually work. Let’s review each of them and how they work together inside these large data centers.

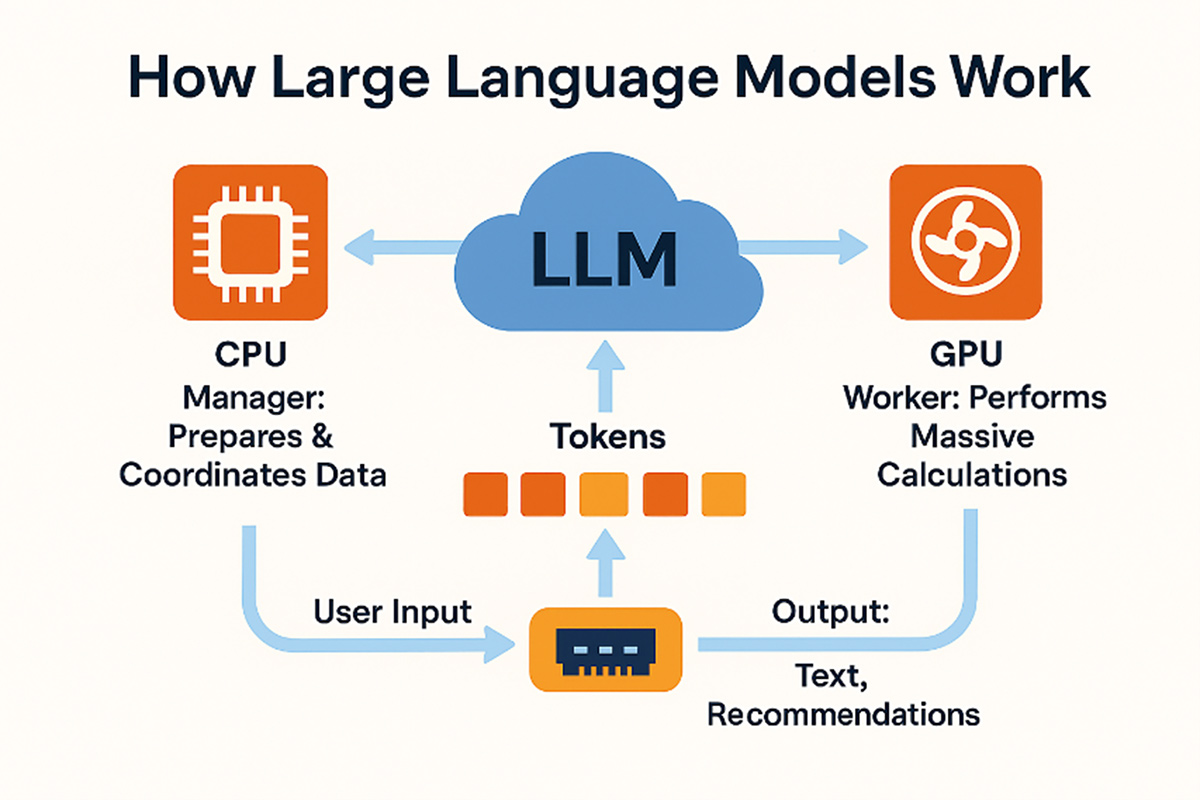

The Central Processing Unit (CPU) is the primary processor in a computer and is often referred to as the “brain” of the system. It handles general-purpose computing tasks like running your operating system, software applications, and everyday instructions.

For AI, the CPU coordinates the overall system, handling logic, memory management, and smaller calculations. A CPU excels at sequential tasks, but because it has relatively few processing cores, it is far too slow to handle the enormous volume of parallel mathematical operations that deep learning requires. It is, however, very effective at managing the more powerful GPUs (and now Tensor Processing Units, or TPUs) and can execute simpler AI tasks on its own.

Graphics Processing Unit (GPU): Originally designed to render 3D graphics for gaming and other video and imaging systems, the GPU has become a vital technology component for AI because it can complete thousands of calculations simultaneously, giving it far greater throughput than a CPU on this kind of work.

The GPU handles the heavy lifting: when we use an AI application, it is processing data and generating responses on GPUs located in a data center. For those following the significant data center investments announced recently, this is what is being built: data centers filled with racks of GPUs, paired with storage systems, that process ever-greater amounts of data and communicate results back to users in a human-like manner.

Explained through an analogy, if training an AI model is like building a skyscraper, think of the CPU as the architect (smart, designing and managing), while the GPU is a vast army of construction workers laying the bricks and installing the wiring and pipes.
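The skyscraper analogy maps onto the underlying math. Much of the work inside a neural network is multiplying a grid of learned weights by a list of input values. In the simplified sketch below (the layer sizes are hypothetical), each output value is an independent calculation, so a GPU can compute thousands of them at the same time, while a CPU works through far fewer at once.

```python
# Simplified neural-network layer: a matrix-vector multiplication.

def layer_output(weights: list[list[float]], inputs: list[float]) -> list[float]:
    # Each output is the dot product of one weight row with the inputs.
    # No row depends on any other row, which is exactly the kind of work
    # a GPU can spread across thousands of cores simultaneously.
    return [sum(w * x for w, x in zip(row, inputs)) for row in weights]

def multiply_adds(rows: int, cols: int) -> int:
    # One multiply-add per weight; a real model repeats this across many layers
    # for every token it processes.
    return rows * cols

print(layer_output([[1, 2], [3, 4]], [10, 1]))  # [12, 34]
print(multiply_adds(4096, 4096))  # 16777216 for one hypothetical 4,096 x 4,096 layer
```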

Tokens: An important component of the data process, tokens are the units of text that AI models process, and they are also how many AI providers meter usage and generate revenue, typically by charging per token. In LLMs like ChatGPT, text isn’t processed word-by-word or letter-by-letter; it’s broken into tokens, which are smaller pieces of text. A token might be a single character, a few letters, or part of a word. For example, the sentence “AI is amazing!” could be segmented into five tokens:

- AI

- is

- amaz

- ing

- !
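The split above can be reproduced with a toy tokenizer. This sketch uses greedy longest-match, similar in spirit to the WordPiece method, with a tiny hypothetical vocabulary; production models such as those behind ChatGPT use byte-pair encoding with vocabularies of many thousands of learned pieces, so their actual splits will differ.

```python
# Toy greedy longest-match tokenizer with a hypothetical five-piece vocabulary.
VOCAB = {"AI", "is", "amaz", "ing", "!"}

def tokenize(text: str, vocab: set[str]) -> list[str]:
    tokens = []
    for word in text.split():  # this toy version simply drops spaces
        start = 0
        while start < len(word):
            # Try the longest remaining piece first, then shrink it until it matches
            # the vocabulary; an unknown single character becomes its own token.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocab or end == start + 1:
                    tokens.append(piece)
                    start = end
                    break
    return tokens

tokens = tokenize("AI is amazing!", VOCAB)
print(tokens)       # ['AI', 'is', 'amaz', 'ing', '!']
print(len(tokens))  # 5
```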

Tokens are critical because AI models are trained to predict the next token in a sequence. The more tokens you input or generate, the more computing power (and cost) it takes. Each model has a “context window,” which is the maximum number of tokens it can “remember” or process at one time. A larger context window lets a model work with longer documents, data sets, or conversations in a single request.
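The relationship between tokens, context windows, and cost comes down to simple arithmetic. The 128,000-token window and the per-million-token prices below are hypothetical placeholders, not any vendor’s actual terms; providers publish their own limits and rates, usually with separate prices for input and output tokens.

```python
# Hypothetical limits and prices, for illustration only.
CONTEXT_WINDOW = 128_000   # maximum tokens per request (input + output) in this sketch
PRICE_PER_M_INPUT = 2.00   # USD per million input tokens
PRICE_PER_M_OUTPUT = 8.00  # USD per million output tokens

def fits_in_context(input_tokens: int, output_tokens: int) -> bool:
    # In this sketch, the prompt and the generated answer share one window.
    return input_tokens + output_tokens <= CONTEXT_WINDOW

def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    return ((input_tokens / 1_000_000) * PRICE_PER_M_INPUT
            + (output_tokens / 1_000_000) * PRICE_PER_M_OUTPUT)

# e.g., a 50,000-token report plus a 2,000-token summary
print(fits_in_context(50_000, 2_000))          # True
print(round(estimate_cost(50_000, 2_000), 3))  # 0.116
```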

We will dive deeper into tokens and how they are used for processing language in a future article, but for now, it’s important to understand that when you ask an AI model a question or give it task instructions:

Your words are broken into tokens → a CPU prepares and manages this request → a GPU performs the massive neural network calculations to generate the next tokens, or your answer → the CPU assembles the results and sends them back to you.
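The “generate the next tokens” step is a loop: predict one token, append it, and predict again. The sketch below imitates that loop using simple word-pair counts from a tiny hypothetical training text; a real LLM replaces the counting with a neural network running on GPUs, but the one-token-at-a-time structure is the same.

```python
from collections import Counter, defaultdict

# Hypothetical, tiny "training data", already split into tokens.
TRAINING_TOKENS = "the site is ready . the site is zoned . the site is ready".split()

def train_bigrams(tokens: list[str]) -> dict[str, Counter]:
    # Count which token follows each token in the training text.
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def generate(prompt: list[str], counts: dict[str, Counter], max_new_tokens: int = 3) -> list[str]:
    output = list(prompt)
    for _ in range(max_new_tokens):
        candidates = counts.get(output[-1])
        if not candidates:
            break  # no known continuation
        # Greedy choice: append the most frequent next token, then repeat.
        output.append(candidates.most_common(1)[0][0])
    return output

model = train_bigrams(TRAINING_TOKENS)
print(generate(["the"], model))  # ['the', 'site', 'is', 'ready']
```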

Retrieval Augmented Generation (RAG) is a technique that helps address data accuracy and the integration of various information sources. With RAG, an AI system first retrieves relevant passages from external data sources, including private documents an organization chooses to connect, and then supplies them to the LLM along with the question so it can ground its response in that material. This allows AI to surface valuable local economic, labor, real estate, demographic, and other trends that might not otherwise be revealed through an LLM alone.

As an example, RAG would allow an EDO to tap into federal and/or vendor data sources alongside its own local data sets stored in a CRM or spreadsheet, potentially surfacing unique insights that lead to smarter public policy decisions or more timely and effective initiatives.
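A stripped-down version of the RAG pattern looks like this: score stored documents against a question, keep the best match, and place it in the prompt sent to the LLM. The records are hypothetical, the word-overlap scoring stands in for the vector embeddings most production RAG systems use, and the step that actually sends the prompt to a model is left out.

```python
import re

# Hypothetical local records an EDO might keep in a CRM or spreadsheet.
DOCUMENTS = [
    "Site 14: 42-acre industrial parcel with rail access, zoned M-2.",
    "Q3 workforce survey: manufacturers report a shortage of CNC machinists.",
    "Downtown retail vacancy rate fell for the third straight quarter.",
]

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    # Rank documents by how many words they share with the question.
    query = words(question)
    ranked = sorted(documents, key=lambda doc: len(query & words(doc)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, documents: list[str], k: int = 1) -> str:
    # "Augment" the question with retrieved context before it goes to the LLM.
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("Which industrial site has rail access?", DOCUMENTS))
```

Because the LLM sees only the retrieved text plus the question, the quality of its answer depends heavily on how well the organization’s own data is collected and maintained.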

Part 2 in this series of articles will be published the week of January 12-16, 2026. It will focus on what makes AI and LLM searches different from, and superior to, traditional Internet searches.

If you would like a free preliminary AI organizational assessment or want to discuss how your organization might get started, please contact Rob Camoin, CEcD, at rcamoin@camoinassociates.com.

View The AI-Ready EDO series home page

About the Author

Robert Camoin, CEcD, is founder and current President of Camoin Associates, an economic development consulting firm that works with EDOs across the nation, and is currently leading the firm’s effort to build its first AI agent. He is also President and CEO of ProspectEngage®, which provides digital business retention and prospecting platforms supported by a closed-source data platform that now incorporates AI for user insight and efficiency.